Abstract

Key Contributions

-

We developed ExeCoder, a LLM specifically designed for code translation, which significantly outperforms all other open-source code LLMs, achieving SOTA performance. Notably, the ExeCoder surpasses well-known the renowned closed-source LLM GPT-4o.

-

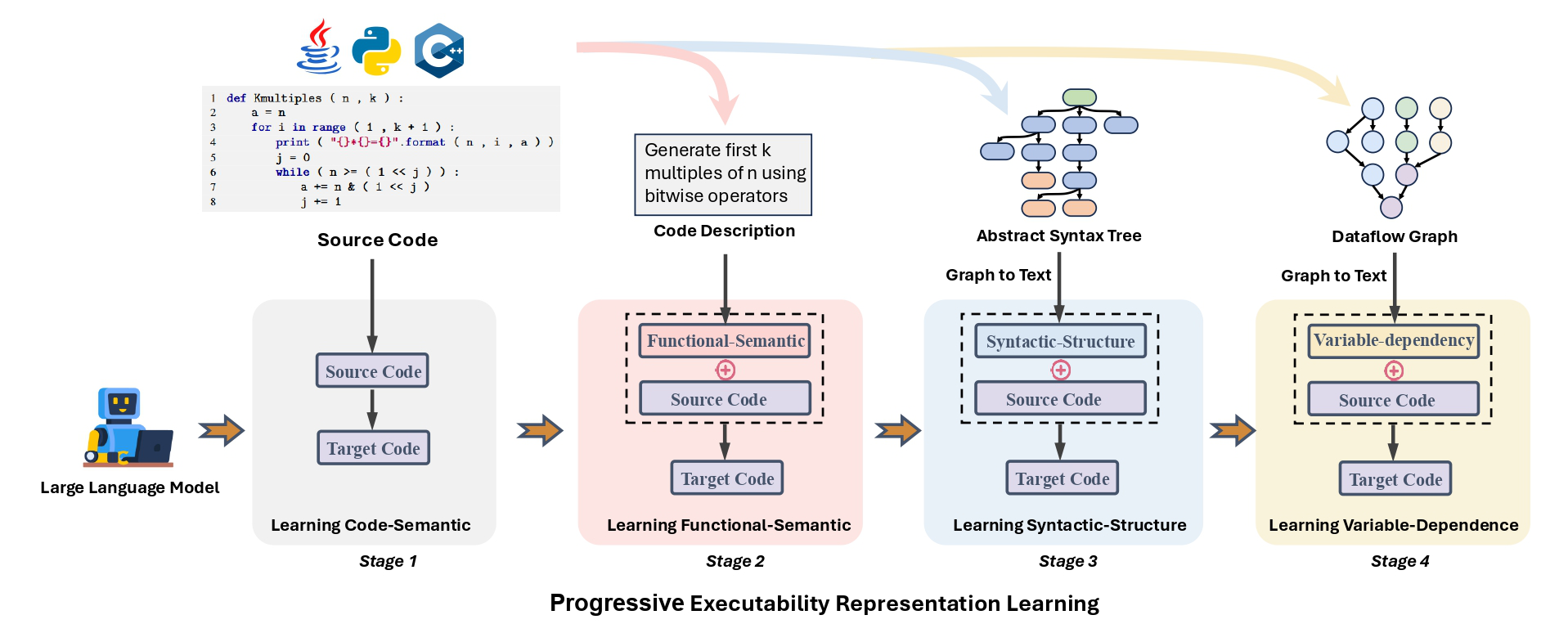

We propose a Progressive Executability Representation Learning strategy that aligns with the learning theory of programming experts and effectively learns executability representations of code.

-

We enhanced the widely used code translation benchmark, TransCoder-test, resulting in a new benchmark called TransCoder-test-X, which is capable of evaluating the code translation abilities of LLMs.

-

We conducted a preliminary study that emphasizes the critical role of executability representations of code in achieving excellent code translation performance.

Main Ideas

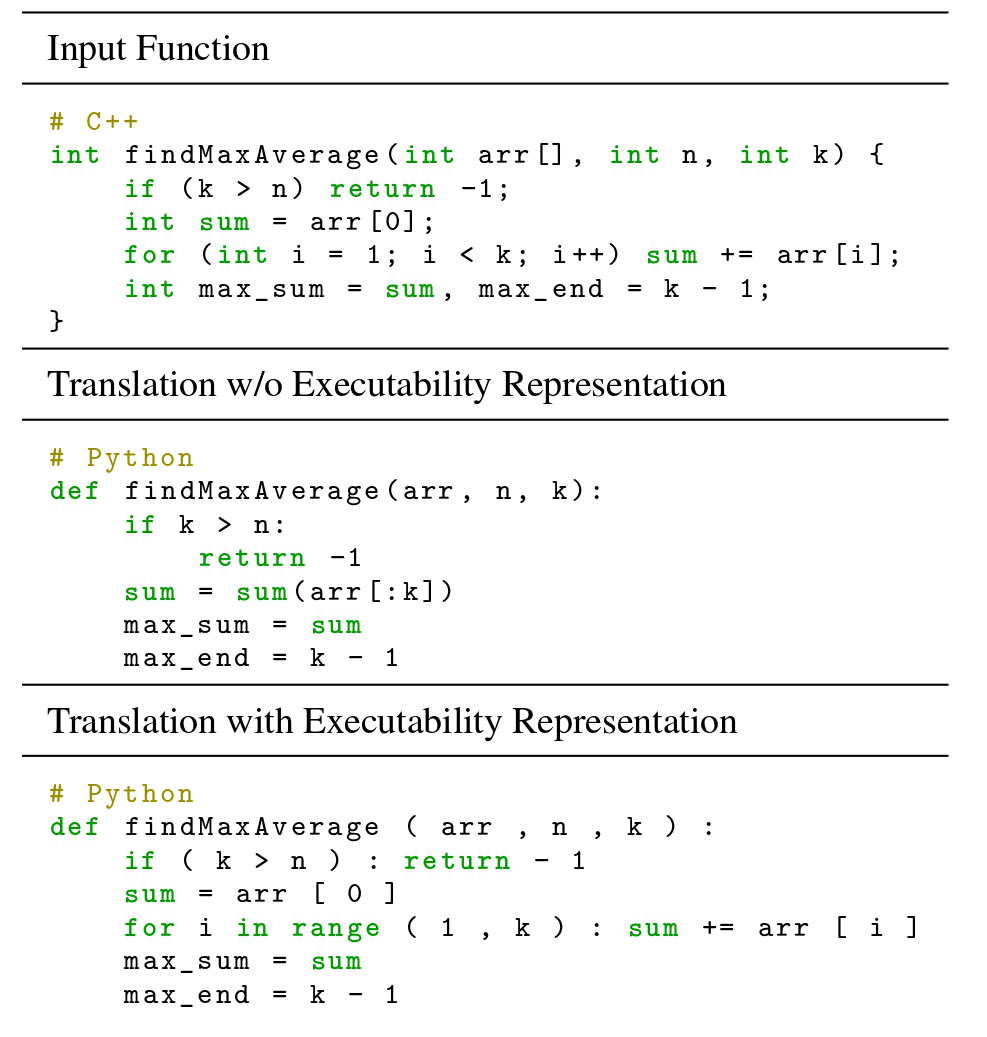

Figure 1: Executability Representation for Code Translation. Existing models simply copy a variable name from the source code, ignoring that the name conflicts with a built-in function the code calls, which leads to a TypeError exception. A model that considers code executability learns the syntactic structure of the source code and avoids the call conflict by summing explicitly in a loop.
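A minimal Python sketch of the failure mode described in Figure 1, assuming a source program that names an accumulator `sum` (legal in C++ or Java) and a Python target. The function names and snippet are hypothetical illustrations, not the code shown in the figure: copying the name verbatim shadows the built-in `sum()`, so a later call raises `TypeError`, while accumulating explicitly in a loop never calls the built-in.

```python
def naive_translation(arr):
    # Hypothetical target code: the accumulator name `sum` is copied verbatim
    # from the source program, which shadows Python's built-in sum() here.
    sum = 0
    sum = sum(arr)  # `sum` is now the int 0, so this call raises TypeError
    return sum


def executability_aware_translation(arr):
    # Same variable name, but the total is built with an explicit loop,
    # so the built-in sum() is never called and nothing conflicts.
    sum = 0
    for value in arr:
        sum += value
    return sum


if __name__ == "__main__":
    try:
        naive_translation([1, 2, 3])
    except TypeError as exc:
        print("naive translation failed:", exc)
    print("explicit loop:", executability_aware_translation([1, 2, 3]))
```

Running it reports the `TypeError` for the naive version and a total of 6 for the loop version; the two functions differ only in how the sum is computed, not in naming.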

Figure 2: ExeCoder's pipeline. ExeCoder first uses text describing the code's functionality to encode functional semantic information. It then uses an abstract syntax tree (AST) to encode syntactic structure information; ASTs have proven effective at encoding both lexical and syntactic information. Finally, it employs a data flow graph (DFG), which tracks data dependencies between variables, to encode variable dependency information. Through the Progressive Executability Representation Learning (PERL) strategy, ExeCoder fully leverages these progressively refined executability representations of code to enhance the cross-programming-language understanding of LLMs.
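To make the AST and DFG views concrete, here is a sketch that derives both from a small Python snippet using the standard `ast` module. It is an illustration under stated assumptions, not ExeCoder's extractor: `ast_view`, `dfg_view`, and the hand-written description are hypothetical, and the DFG is simplified to edges from each variable read on an assignment's right-hand side to each variable that assignment writes.

```python
import ast


def ast_view(source: str) -> str:
    """Syntactic structure: a serialized abstract syntax tree (Python 3.9+)."""
    return ast.dump(ast.parse(source), indent=1)


def dfg_view(source: str) -> list[tuple[str, str]]:
    """Variable dependency: deduplicated (read_var, written_var) edges.

    Simplifying assumption: every variable read on the right-hand side of an
    assignment flows into every variable written on its left-hand side.
    """
    edges: list[tuple[str, str]] = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Assign):
            targets, value = node.targets, node.value
        elif isinstance(node, ast.AugAssign):
            targets, value = [node.target], node.value
        else:
            continue
        written = [n.id for t in targets for n in ast.walk(t) if isinstance(n, ast.Name)]
        read = [n.id for n in ast.walk(value)
                if isinstance(n, ast.Name) and isinstance(n.ctx, ast.Load)]
        if isinstance(node, ast.AugAssign):
            read = written + read  # `x += y` also reads x before writing it
        for dst in written:
            for src in read:
                if (src, dst) not in edges:
                    edges.append((src, dst))
    return edges


SOURCE = 's = "ab"\nt = s + s\nu = t + s\n'

# Three views, ordered as in the pipeline: function -> syntax -> dependency.
views = {
    "description": "Concatenate a string with itself, then append it once more.",  # hand-written
    "ast": ast_view(SOURCE),
    "dfg": dfg_view(SOURCE),
}

if __name__ == "__main__":
    for name, view in views.items():
        print(f"--- {name} ---\n{view}")
```

For the sample source, `dfg_view` yields `[('s', 't'), ('t', 'u'), ('s', 'u')]`: `t` depends on `s`, and `u` depends on both. A full DFG would also account for control flow and for which definition of a variable reaches each use.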

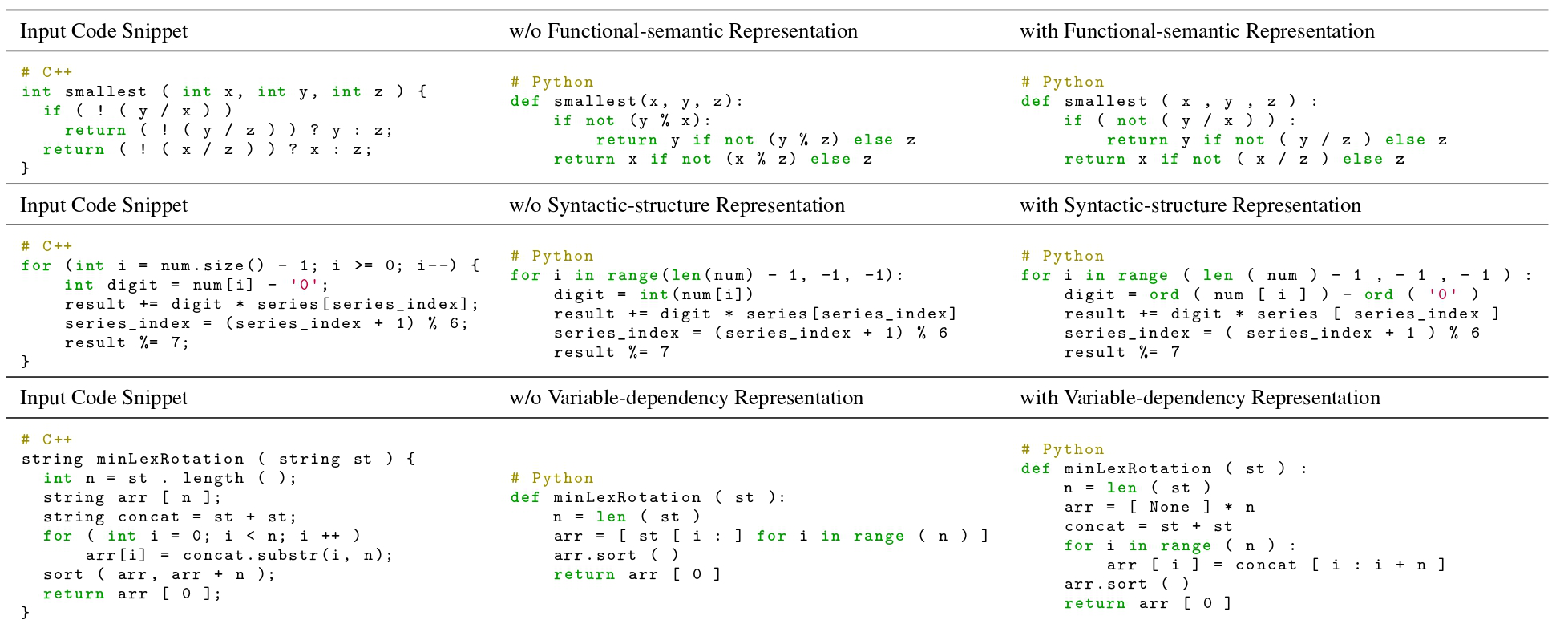

Figure 3: Three Types of Executability Representations. The first example shows a functional semantic error: the baseline model is not informed that the function computes a minimum number, and substitutes the similar-looking modulus operator for the division operator. The second example shows a syntactic structure error: the baseline model uses forced type conversion to turn characters into numbers, which raises a ValueError exception when the input contains non-numeric characters. The third example shows a variable dependency error: the baseline model has not learned how values pass between variables, and therefore neglects to concatenate the string with itself. Each of these errors lies a minimal edit distance from the correct code, yet has a significant impact on execution. Learning the executability representation indicates the execution status, which helps resolve such errors.
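The caption's point that a small edit distance can hide a large change in behavior can be checked with a short sketch: a standard dynamic-programming Levenshtein distance, plus evaluation of hypothetical correct/buggy expression pairs loosely modeled on the first and third examples. The pairs, variable values, and helper names are assumptions for illustration, not the figure's actual code.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance with unit-cost insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def run(expr: str, env: dict) -> object:
    """Evaluate a trusted expression snippet against the given variables."""
    return eval(expr, {}, dict(env))


# Hypothetical (correct, buggy) pairs, loosely modeled on Figure 3.
PAIRS = [
    ("x / y", "x % y"),  # functional semantics: division swapped for modulus
    ("s + s", "s"),      # variable dependency: self-concatenation dropped
]

if __name__ == "__main__":
    env = {"x": 7, "y": 2, "s": "ab"}
    for correct, buggy in PAIRS:
        print(f"{correct!r} vs {buggy!r}: edit distance {levenshtein(correct, buggy)}, "
              f"results {run(correct, env)!r} vs {run(buggy, env)!r}")
```

With `x = 7` and `y = 2`, swapping `/` for `%` is a one-character edit, yet the result changes from `3.5` to `1`; dropping the self-concatenation turns `'abab'` into `'ab'`.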

Citation

@misc{he2025execoderempoweringlargelanguage,
  title={ExeCoder: Empowering Large Language Models with Executability Representation for Code Translation},
  author={Minghua He and Fangkai Yang and Pu Zhao and Wenjie Yin and Yu Kang and Qingwei Lin and Saravan Rajmohan and Dongmei Zhang and Qi Zhang},
  year={2025},
  eprint={2501.18460},
  archivePrefix={arXiv},
  primaryClass={cs.SE},
  url={https://arxiv.org/abs/2501.18460},
}